Unicode is a standard way of character encoding which was designed to replace all old encodings S.A. ASCII using the Unicode standard transformation.

ASCII was a way of representing English characters, numbers and some punctuation marks by giving each character a code between 32 and 126 while other codes are unprintable and reserved for special use. E.g. '\b', '\n', '\a', '\v', '\r'.

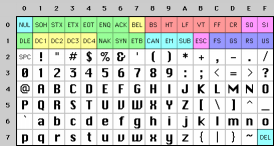

ASCII character set

ASCII character setASCII could be represented in 7-bits but conveniently it's stored in 8-bits.

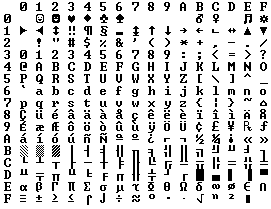

Codes from 128 to 255 can be used for any purpose. But IBM-PC had something called OEM character set which provides the first 128 ASCII character set in addition to some drawing characters. Horizontal bars, vertical bars…

OEM character set

OEM character setHere troubles began. Every one can use Codes from 128 to 255 as he wish. And support his regional language. The matter became worse with Asian languages.

Unicode provides a unique number for every character,

no matter what the platform,

no matter what the program,

no matter what the language.

Originally Unicode was designed to provide a single character set that contains every available character on the Earth. Every character is assigned a value and written as U+XXXX in hex-decimal. So A is assigned a U+0041.

There are some design principles:

Unicode Standard concerns with characters not glyphs. Glyphs are shapes that appear on screen, a printed document.

A character can have more than one glyph.

e.g.: A, A and A are different glyphs to letter A. While Ain (U+0645) (ﻉ ﻋ ﻊ ﻌ) has 4 forms (isolated, initial, medial, final). So drawing these glyphs is the responsibility of text rendering while Unicode standards concerns only with characters representation in memory or other media.Plain text is a sequence of character codes while rich text or fancy text contains additional information such as text color, size, font…

Unicode standard encodes only plain text.Unicode text is stored in their Logical order. When mixing different languages Unicode still preserves logical order.

e.g. : mixing English and Arabic text will be stored in logical order while rendering this text will be according to direction of each language.

Other issue is combining marks (accent in some Latin scripts and vowel marks in Arabic (التشكيل)) follows base characters while in rendering they don't appear linearly.Unicode provides Unification concept that is each character has a unique code. Common letters and punctuation marks are given a unique code regardless of the language.

Conversion between Unicode and other standards must be guaranteed.

In general a single code in other standard maps to a single code in Unicode.

These design principles are not satisfied for Unicode implementation. They are only principles.

Encoding forms for Unicode:

There are two major categories for Unicode encoding:

UTF universal transformation format and UCS universal character set. The major difference between UTF and UCS is that UTF is variable length encoding while UCS is fixed length encoding.

UCS-2

A fixed length encoding consists of 2 bytes sometimes named as plain Unicode.

Let's encode Hello world!

U+0048 U+0065 U+006C U+006C U+006F U+0020 U+0077 U+006F U+0072 U+006C U+0064.

This can be stored as:

Little-Endian: 4800 6500 6C00 6C00 6F00 2000 7700 6F00 7200 6C00 6400.

Or Big-Endian: 0048 0065 006C 006C 006F 0020 0077 006F 0072 006C 0064.

Little-Endian is more common.

UTF-8:

Uses from one to four bytes. All ASCII symbols require one byte.

Two bytes for Greek, Armenian, Arabic, Hebrew…

| Range | Encoding |

| 000000–00007F | ASCII characters, byte begins with zero bits. ( coded as original ASCII encoding ) |

| 000080–0007FF | First byte begins with 110 bits the following byte begins with 10. |

| 000800–00FFFF | First byte begins with 1110 bits; the following bytes begin with 10. |

| 010000–10FFFF | First byte begins with 11110 bits, the following bytes begin with 10 |

E.g.: Value of 0x3D6 01111010110 is encoded in 2-bytes 11001111 10010110.

UTF-16:

Uses 2-bytes or 4-bytes encoding.

More complex than UTF-8, designed mainly to extend the range of UCS-2.

For encoding: all codes above U+FFFF must be encoded in two words a (pair)

E.g. U+23458 :First subtract 10000 = 13458.

Divide the resulting 20-bit into two halves: 0001001101, 0001011000.

Initialize the first 6-bits of the first word with 110110 then add the higher half that is 1101100001001101 or 0xD84D.

Initialize the first 6-bits of the second word with 110111 then add the lower half that is 1101110001011000 or 0xDC58.

Finally the encoding is 0xD84D 0xDC58.

For safety values from U+D800 to U+DBFF and U+DC00 to U+DFFF are not available to represent characters in 2-byte format (used to represent values higher than 0xFFFF).

Java supports UTF-8 in normal programming through InputStreamReader and OutputStreamWriter.

To create a string literal in C you use char str[] = "Hello world!" which is encoded as ASCII to create a literal encoded in UCS-2 use char str[] = L"Hello world!"

Here are some values from Unicode:

| A A A | Letter A | U+0041 |

| AB | Letter A + Letter B | U+0041 + U+0042 |

| a | Small A | U+0061 |

| ﻫ ﻪ ﻬ ﻫ ﻪ ﻬ | Letter Heh | U+0647 |

| َُ | Damma ضمه | U+064F |

| نُ | Noon + Dammaنون + ضمه | U+0646 + U+064F |

The Unicode Consortium is a non-profit organization that is responsible for Unicode's development.

Try playing with Unicode encodings create a simple html file maybe with hex editor write 0048 0065 006C 006C 006F 0020 0077 006F 0072 006C 0064 and view the file using a browser and changing the browser's character encoding.

Finally this article was so long than I expected. I couldn't cover every thing I want. You can read THE UNICODE STANDARD book versions 3.0, 4.0 and 5.0.

You can refer to www.unicode.org for more info.